A Web Data ETL (Extract, Transform, Load) pipeline is a systematic process used in data engineering to collect, transform, and load data from various sources on the internet into a structured and usable format for analysis and storage. So, if you want to learn how to scrape data from a web page and build a Data ETL pipeline using the data collected from the web, this article is for you. In this article, I will take you through building a Web Data ETL pipeline using Python.

Web Data ETL Pipeline: Process We Can Follow

A Web Data ETL (Extract, Transform, Load) pipeline is a systematic process used in data engineering to collect, transform, and load data from various sources on the internet into a structured and usable format for analysis and storage. It is essential for managing and processing large volumes of data gathered from websites, online platforms, and digital sources.

The process begins with data extraction, where relevant information is collected from websites, APIs, databases, and other online sources.

Then this raw data is transformed through various operations, including cleaning, filtering, structuring, and aggregating. After transformation, the data is loaded into a CSV file or database, making it accessible for further analysis, reporting, and decision-making.

Web Data ETL Pipeline using Python

Now let’s see how to build a Web Data ETL pipeline using Python. Here I will create a pipeline to scrape textual data from any article on the web. We will aim to store the data about the frequencies of each word in the article. I’ll start this task by importing the necessary Python libraries:

# use command for installing beautifulsoup and nltk: pip install beautifulsoup4 nltk

import requests

from bs4 import BeautifulSoup

from nltk.corpus import stopwords

from collections import Counter

import pandas as pd

import nltk

nltk.download('stopwords')Now let’s start by extracting text from any article on the web:

class WebScraper:

def __init__(self, url):

self.url = url

def extract_article_text(self):

response = requests.get(self.url)

html_content = response.content

soup = BeautifulSoup(html_content, "html.parser")

article_text = soup.get_text()

return article_textIn the above code, the WebScraper class provides a way to conveniently extract the main text content of an article from a given web page URL. By creating an instance of the WebScraper class and calling its extract_article_text method, we can retrieve the textual data of the article, which can then be further processed or analyzed as needed.

As we want to store the frequency of each word in the article, we need to clean and preprocess the data. Here’s how we can clean and preprocess the text extracted from the article:

class TextProcessor:

def __init__(self, nltk_stopwords):

self.nltk_stopwords = nltk_stopwords

def tokenize_and_clean(self, text):

words = text.split()

filtered_words = [word.lower() for word in words if word.isalpha() and word.lower() not in self.nltk_stopwords]

return filtered_wordsIn the above code, the TextProcessor class provides a convenient way to process text data by tokenizing it into words and cleaning those words by removing non-alphabetic words and stopwords. It is often a crucial step in text analysis and natural language processing tasks. By creating an instance of the TextProcessor class and calling its tokenize_and_clean method, you can obtain a list of cleaned and filtered words from a given input text.

So till now, we have defined classes for scraping and preparing the data. Now we need to define a class for the entire ETL (Extract, Transform, Load) process for extracting article text, processing it, and generating a DataFrame of word frequencies:

class ETLPipeline:

def __init__(self, url):

self.url = url

self.nltk_stopwords = set(stopwords.words("english"))

def run(self):

scraper = WebScraper(self.url)

article_text = scraper.extract_article_text()

processor = TextProcessor(self.nltk_stopwords)

filtered_words = processor.tokenize_and_clean(article_text)

word_freq = Counter(filtered_words)

df = pd.DataFrame(word_freq.items(), columns=["Words", "Frequencies"])

df = df.sort_values(by="Frequencies", ascending=False)

return dfIn the above code, the ETLPipeline class encapsulates the end-to-end process of extracting article text from a web page, cleaning and processing the text, calculating word frequencies, and generating a sorted DataFrame. By creating an instance of the ETLPipeline class and calling its run method, you can perform the complete ETL process and obtain a DataFrame that provides insights into the most frequently used words in the article after removing stopwords.

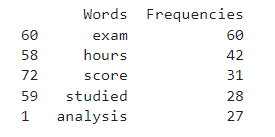

Now here’s how to run this pipeline to scrape textual data from any article from the web and count the frequency of each word in the article:

if __name__ == "__main__":

article_url = "https://amankharwal.medium.com/what-is-time-series-analysis-in-data-science-f89aaa1c0814"

pipeline = ETLPipeline(article_url)

result_df = pipeline.run()

print(result_df.head())

So this is how you can build a Web Data ETL pipeline using Python. Find the full code here.

Summary

A Web Data ETL (Extract, Transform, Load) pipeline is a systematic process used in data engineering to collect, transform, and load data from various sources on the internet into a structured and usable format for analysis and storage. I hope you liked this article on building a Web Data ETL pipeline using Python. Feel free to ask valuable questions in the comments section below.