Hyperparameter tuning is a critical step in optimizing machine learning models for better performance. In this article, we’ll explore hyperparameter tuning techniques, specifically GridSearchCV and RandomizedSearchCV, applied to the Random Forest algorithm using the heart disease dataset. We’ll demonstrate how these techniques can help improve the accuracy and generalization of the model.

Overview

This is a classification problem. We are gonna make use of heart disease dataset for our task. For optimizing machine learning models hyperparameter tuning is very necessary. We will see GridSearchCV and RandomizedSearchCV techniques for tuning. Access dataset here.

Understanding Random Forest

Random Forest is an ensemble learning algorithm that builds multiple decision trees during training and combines their predictions to improve accuracy and reduce overfitting. It randomly selects subsets of features and data samples to build each tree, making it robust and effective for classification tasks like predicting heart disease.

Heart Disease Dataset

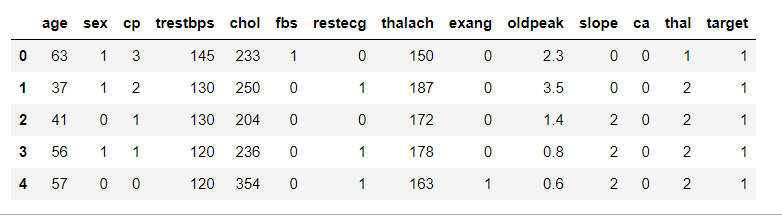

The heart disease dataset contains various medical attributes such as age, sex, cholesterol levels, and other factors that may influence the presence of heart disease. The target variable indicates whether a patient has heart disease (1) or not (0). We’ll use this dataset to train and tune our Random Forest model.

Hyperparameter Tuning Techniques

- GridSearchCV: GridSearchCV is an exhaustive search technique that evaluates a specified grid of hyperparameters for a given estimator. It performs cross-validation on all possible combinations of hyperparameters and selects the combination with the best performance based on a scoring metric.

- RandomizedSearchCV: RandomizedSearchCV is a randomized search technique that samples hyperparameter values from specified distributions. It conducts a fixed number of iterations and selects the best combination of hyperparameters based on the scoring metric.

Get data and libraries

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier,GradientBoostingClassifier

from sklearn.svm import SVC

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

df = pd.read_csv('heart.csv')Show data preview

df.head()

We have total 303 rows and 14 columns in our dataset.

df.shapeLets extract X and y as input and target columns from the dataset.

X = df.iloc[:,0:-1]

y = df.iloc[:,-1]Do separate training and testing data using train test and split.

X_train,X_test,y_train,y_test = train_test_split(X,y,test_size=0.2,random_state=42)

print(X_train.shape)

print(X_test.shape)Take the random forest classifier and check the accuracy score.

rf = RandomForestClassifier()

rf.fit(X_train,y_train)

y_pred = rf.predict(X_test)

accuracy_score(y_test,y_pred)Accuracy score is around 0.8524590163934426.

Compare the random forest algorithm with other algorithms as well such as gradient boosting classifier, SVC, logistic regression and many more. Now take the gradient boosting classifier and check the accuracy score.

gb = GradientBoostingClassifier()

gb.fit(X_train,y_train)

y_pred = gb.predict(X_test)

accuracy_score(y_test,y_pred)The accuracy score for gradient boosting classifier is around 0.7704918032786885.

Take the SVM classifier and check the accuracy score.

svc = SVC()

svc.fit(X_train,y_train)

y_pred = svc.predict(X_test)

accuracy_score(y_test,y_pred)The accuracy score for SVM classifier is around 0.7049180327868853.

Same way check for logistic regression as well.

lr = LogisticRegression()

lr.fit(X_train,y_train)

y_pred = lr.predict(X_test)

accuracy_score(y_test,y_pred)The accuracy score for logistic regression classifier is around 0.8852459016393442. As you can see clearly that random forest classifier performs more better than other algorithms except logistic regression. Now lets make use of some hyperparameters to increase the performance of model even more for random forest classifier.

Note: after using cross validation on random forest the accuracy might decrease.

We are using max_samples as the hyperparameter for number of rows passing.

rf = RandomForestClassifier(max_samples=0.75,random_state=42)

rf.fit(X_train,y_train)

y_pred = rf.predict(X_test)

accuracy_score(y_test,y_pred)And the accuracy increased a little to 0.9016393442622951. By using cross validation we can get accurate score for random forest.

from sklearn.model_selection import cross_val_score

np.mean(cross_val_score(RandomForestClassifier(max_samples=0.75),X,y,cv=10,scoring='accuracy'))There are lot of parameters in random forest classifier. The question is, how do we know what value we should take for which parameter to get better performance. The solution is hyperparameter tuning. The very famous way to do hyperparameter tuning is GridSearchCV and RandomizedSearchCV.

Perform Hyperparameter Tuning Using GridSearchCV

- Define the grid of hyperparameters to search.

- Use GridSearchCV to find the best combination of hyperparameters.

The GridSearchCV is a technique used for hyperparameter tuning in machine learning. It is part of the scikit-learn library in Python and is widely used for finding the best combination of hyperparameters.

# Number of trees in random forest

n_estimators = [20,60,100,120]

# Number of features to consider at every split

max_features = [0.2,0.6,1.0]

# Maximum number of levels in tree

max_depth = [2,8,None]

# Number of samples

max_samples = [0.5,0.75,1.0]

# total 108 diff random forest trainMake the dictionary of parameters.param_grid = {'n_estimators': n_estimators,

'max_features': max_features,

'max_depth': max_depth,

'max_samples':max_samples

}

print(param_grid)rf = RandomForestClassifier()Pass all the parameters and train the model again.

from sklearn.model_selection import GridSearchCV

rf_grid = GridSearchCV(estimator = rf,

param_grid = param_grid,

cv = 5,

verbose=2,

n_jobs = -1)

rf_grid.fit(X_train,y_train)Now lets see what are the best parameters that we can make use of.

rf_grid.best_params_And the best score will be 0.8346088435374149.

You can do the same thing with random search cv as well. It is useful when there is large amount of data given. Lets perform the same process with different parameters for random forest again.

# Number of trees in random forest

n_estimators = [20,60,100,120]

# Number of features to consider at every split

max_features = [0.2,0.6,1.0]

# Maximum number of levels in tree

max_depth = [2,8,None]

# Number of samples

max_samples = [0.5,0.75,1.0]

# Bootstrap samples

bootstrap = [True,False]

# Minimum number of samples required to split a node

min_samples_split = [2, 5]

# Minimum number of samples required at each leaf node

min_samples_leaf = [1, 2]Show the parameter grid dictionary.

param_grid = {'n_estimators': n_estimators,

'max_features': max_features,

'max_depth': max_depth,

'max_samples':max_samples,

'bootstrap':bootstrap,

'min_samples_split':min_samples_split,

'min_samples_leaf':min_samples_leaf

}

print(param_grid)Use RandomizedSearchCV class of sklearn.

from sklearn.model_selection import RandomizedSearchCV

rf_grid = RandomizedSearchCV(estimator = rf,

param_distributions = param_grid,

cv = 5,

verbose=2,

n_jobs = -1)Train the model again using fit method.

rf_grid.fit(X_train,y_train)See the best parameters and best score.

rf_grid.best_params_rf_grid.best_score_And the best score will be 0.8220238095238095. This method is much faster than GridSearchCV . Access the full code here.

Hyperparameter tuning is a crucial step in optimizing machine learning models for better performance. In this article, we demonstrated the use of GridSearchCV and RandomizedSearchCV techniques to tune the hyperparameters of a Random Forest classifier on the heart disease dataset. By systematically searching through the hyperparameter space, we can find the optimal combination of hyperparameters that improves the model’s accuracy and generalization ability. These techniques enable us to fine-tune our models and achieve better results in real-world applications, such as predicting heart disease risk in patients.